3 Methods for the Elven Kings Under the Sky

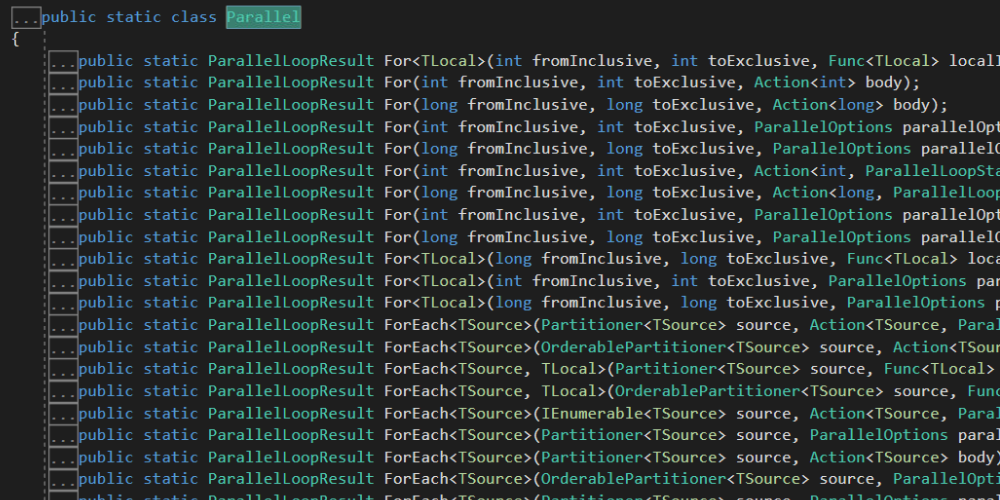

Today I want to talk to you about the Parallel static class. This class provides support for parallelizing loops and regions, and just like PLINQ, it’s really easy to use. It’s worth noting that it’s specially optimized for iterations, and in this context it performs a bit better than PLINQ. There’s no significant difference in absolute times, but you can see it perfectly if you use Visual Studio 2010’s magnificent profiler. However, there might be situations where you really need to fine-tune performance in iterations, and this is where it makes the most sense to use two of the three methods of this class: For and ForEach. We’ll call the third one Cirdan and it will barely appear in this story (I’m actually referring to Invoke, but it won’t show up here either).

Understanding Actions

Both methods have a very similar signature in their simplest form. Each one runs a block of instructions n times, and that block is passed in as an Action-type parameter:

    public static ParallelLoopResult For(
        int fromInclusive, int toExclusive, Action<int> body)

    public static ParallelLoopResult ForEach<TSource>(
        IEnumerable<TSource> source, Action<TSource> body)

An Action<T> is a delegate that takes a single parameter of type T and returns no value.

I don’t want to start rambling about functional programming now, although I do want to emphasize the use of Actions and how important they’ve become in recent years. In fact, I recently dedicated a post to how Action and Func have greatly simplified working with delegates for developers.
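To make that concrete, here is a minimal sketch of a method that receives an Action<int>, much as Parallel.For does. The ActionDemo class and its Repeat and CollectSquares helpers are hypothetical names invented for this illustration, and Repeat deliberately runs sequentially.

```csharp
using System;
using System.Collections.Generic;

public static class ActionDemo
{
    // Hypothetical helper: calls body once per index, like a sequential Parallel.For.
    public static void Repeat(int fromInclusive, int toExclusive, Action<int> body)
    {
        for (int i = fromInclusive; i < toExclusive; i++)
        {
            body(i);
        }
    }

    public static List<int> CollectSquares(int count)
    {
        var squares = new List<int>();
        // The lambda is converted to an Action<int>: one int in, nothing returned.
        Repeat(0, count, i => squares.Add(i * i));
        return squares;
    }

    public static void Main()
    {
        // An Action<int> can also be stored in a variable and passed around.
        Action<int> print = i => Console.WriteLine("Iteration {0}", i);
        Repeat(0, 3, print);
        Console.WriteLine(string.Join(", ", CollectSquares(4)));
    }
}
```

Running Main prints the three iterations followed by 0, 1, 4, 9. The point is only that the loop body becomes a value we hand to another method, which is what the For and ForEach signatures ask for.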

The Parallel.For Method

But getting back to the topic at hand, if we look at the signature of the Parallel.For method we can see that, in the aspects that matter, it doesn’t differ much from its classic for counterpart: both have a start, an end, and a set of actions to perform a certain number of times.

So starting from the IsPrime method we already used in the previous post about PLINQ, let’s see a comparison between the syntax of these two methods:

    for (int i = 0; i < 100; i++)
    {
        if (i.IsPrime())
            Console.WriteLine(string.Format("{0} is prime", i));
        else
            Console.WriteLine(string.Format("{0} is not prime", i));
    }

    Parallel.For(0, 100, (i) =>
    {
        if (i.IsPrime())
            Console.WriteLine(string.Format("{0} is prime", i));
        else
            Console.WriteLine(string.Format("{0} is not prime", i));
    });

In both cases we have a series of lines that must be executed 100 times, specifically for the values 0 to 99, since the upper bound is excluded in both cases. The only difference is that the loop body is now passed as an Action<int>.
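One thing the two listings hide is that the parallel version runs its iterations concurrently and in no guaranteed order, so any state shared between iterations needs protection. The sketch below counts primes both ways. The IsPrime implementation shown is an assumption (plain trial division), since the original extension method isn’t reproduced in this post, and PrimeCounter is a hypothetical name.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class PrimeExtensions
{
    // Assumed implementation: trial division. The original IsPrime is not shown here.
    public static bool IsPrime(this int number)
    {
        if (number < 2) return false;
        // The long cast avoids overflow when number is close to int.MaxValue.
        for (int divisor = 2; (long)divisor * divisor <= number; divisor++)
        {
            if (number % divisor == 0) return false;
        }
        return true;
    }
}

public static class PrimeCounter
{
    public static int CountPrimesSequential(int toExclusive)
    {
        int count = 0;
        for (int i = 0; i < toExclusive; i++)
        {
            if (i.IsPrime()) count++;
        }
        return count;
    }

    public static int CountPrimesParallel(int toExclusive)
    {
        int count = 0;
        Parallel.For(0, toExclusive, i =>
        {
            // Iterations run concurrently, so the shared counter needs an atomic increment.
            if (i.IsPrime()) Interlocked.Increment(ref count);
        });
        return count;
    }

    public static void Main()
    {
        Console.WriteLine(CountPrimesSequential(100)); // 25
        Console.WriteLine(CountPrimesParallel(100));   // 25
    }
}
```

With a plain count++ inside the parallel body, the result could occasionally come out lower than 25, because two threads can read and write the counter at the same time.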

So, let’s parallelize

Looking at it this way, it should be extremely simple to transform all our loops this way, so should we do it? The answer is NO.

Sometimes parallelizing brings no gain at all: if the work per iteration is minimal, we’ll spend more time splitting the work across threads, running them, and consolidating the results than we would running the loop sequentially. We might also hit an external bottleneck in an I/O device, like a port, a remote server, or a socket.

Another clear example of this is nested loops. It’s common to nest several for or foreach structures to implement certain algorithms. In this case, the candidate for parallelization is normally the outer loop, and it’s not necessary (in fact it would usually be counterproductive) to parallelize the inner loops as well:

    Parallel.For(0, 100, (z) =>
    {
        for (int i = 0; i < 100; i++)
        {
            if (i.IsPrime())
                Console.WriteLine(string.Format("{0} is prime", i));
            else
                Console.WriteLine(string.Format("{0} is not prime", i));
        }
    });

The reason is simple: to run all 100 outer iterations at the same time we’d need a computer with 100 cores, which most of us don’t have, so the TPL has to group these iterations to fit the available cores, and that partitioning and synchronization takes some time (similar to the first examples with monkeys in the series). Now imagine we parallelize both loops: 100 x 100 = 10,000 cores? It simply doesn’t make sense.

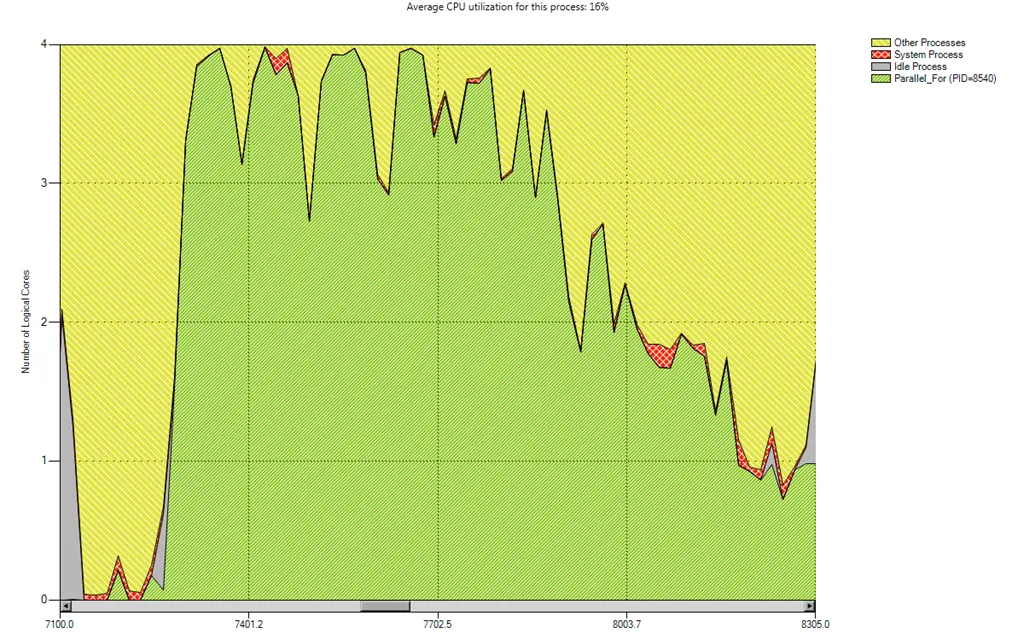

My advice is that in all cases where you decide to parallelize a loop (and this also applies to PLINQ queries), you should first do a performance comparison.
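A comparison like that doesn’t need anything fancy. As a rough sketch, a Stopwatch around each version is enough for a first impression. LoopBenchmark, Measure, and Work are hypothetical names, and Work is just a stand-in CPU-bound workload, so replace it with your real loop body before drawing conclusions.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class LoopBenchmark
{
    // Hypothetical helper: runs the action once and returns the elapsed milliseconds.
    public static long Measure(Action action)
    {
        var clock = Stopwatch.StartNew();
        action();
        clock.Stop();
        return clock.ElapsedMilliseconds;
    }

    // Stand-in workload (an assumption): some CPU-bound arithmetic per iteration.
    public static double Work(int i)
    {
        double total = 0;
        for (int k = 1; k <= 20000; k++) total += Math.Sqrt(i + k);
        return total;
    }

    public static void Main()
    {
        const int iterations = 2000;
        var results = new double[iterations];

        // Warm-up run so JIT compilation and thread pool start-up don't skew the numbers.
        Measure(() => Parallel.For(0, iterations, i => { results[i] = Work(i); }));

        long sequential = Measure(() =>
        {
            for (int i = 0; i < iterations; i++) results[i] = Work(i);
        });

        // Each iteration writes to its own array slot, so no locking is needed.
        long parallel = Measure(() =>
            Parallel.For(0, iterations, i => { results[i] = Work(i); }));

        Console.WriteLine("Sequential: {0} ms", sequential);
        Console.WriteLine("Parallel:   {0} ms", parallel);
    }
}
```

The numbers depend entirely on the machine and the workload, so run it several times. Try shrinking the inner loop in Work to a handful of iterations to see the parallel version lose its advantage.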

The Parallel.ForEach Method

As for the ForEach method, it’s practically the same as the previous one except that we don’t have a start and end, but an input data sequence (based on IEnumerable, like PLINQ) and a variable we use to iterate through each of the elements in the sequence and perform a series of actions.

Consider the following code:

    List<FeedDefinition> feeds = new List<FeedDefinition>();
    clock.Restart();
    var blogs = FeedsEngine.GetBlogsUrls();
    foreach (var blog in blogs)
    {
        feeds.AddRange(FeedsEngine.GetBlogFeeds(blog));
    }
    clock.Stop();
    this.Text = clock.ElapsedMilliseconds.ToString("n2");
    feeds.ForEach(p => Console.WriteLine(p.Name));

Assuming we have a FeedsEngine.GetBlogsUrls method that returns a list of URLs that provide RSS content, the above code connects to each of the URLs and tries to download all the post information through a FeedsEngine.GetBlogFeeds(blog) method.

As you can imagine, this totally sequential process is a serious candidate for parallelization, since most of its time is spent connecting to an external server and waiting for it to respond. Parallelizing will be of great help here, although it’s important to understand that the performance gain won’t come from using more local processing power, but from having requests to different servers in flight at the same time.

So, it’s enough to change the foreach loop part to its parallelized version:

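    Parallel.ForEach(blogs, (blog) =>
    {
        // Download outside the lock so the requests still overlap.
        var blogFeeds = FeedsEngine.GetBlogFeeds(blog);
        // List<T> is not thread-safe, so only the merge is serialized.
        lock (feeds)
        {
            feeds.AddRange(blogFeeds);
        }
    });

Here we define the data sequence to use and declare the blog variable on the fly (the compiler automatically infers the type) to the left of the lambda expression, and to the right the actions we want to perform. They are the same as in the previous foreach version, except for one detail: several iterations can now reach AddRange at the same time, and List<T> doesn’t support concurrent writes, so we take a lock around that call.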

And we’ll see how it runs much faster. On my workstation we went from 6.7 seconds to 1.4, which isn’t bad at all.
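Since several iterations add results to the same collection at once, that collection has to tolerate concurrent writes. One option, sketched below, is to collect into a ConcurrentBag<T>. FeedDownloadSketch and FakeGetBlogFeeds are hypothetical stand-ins: the real FeedsEngine isn’t shown in this post, so the fake version just sleeps to mimic network latency and returns made-up post names.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class FeedDownloadSketch
{
    // Hypothetical stand-in for FeedsEngine.GetBlogFeeds: waits to mimic network latency.
    public static List<string> FakeGetBlogFeeds(string blogUrl)
    {
        Thread.Sleep(100);
        return new List<string> { blogUrl + "/post-1", blogUrl + "/post-2" };
    }

    public static List<string> DownloadAll(IEnumerable<string> blogs)
    {
        // ConcurrentBag<T> accepts Add calls from several threads at once.
        var feeds = new ConcurrentBag<string>();
        Parallel.ForEach(blogs, blog =>
        {
            foreach (var feed in FakeGetBlogFeeds(blog))
            {
                feeds.Add(feed);
            }
        });
        // The bag is unordered, so sort to get a stable result.
        return feeds.OrderBy(f => f, StringComparer.Ordinal).ToList();
    }

    public static void Main()
    {
        var blogs = new[] { "http://a.example", "http://b.example", "http://c.example" };
        foreach (var feed in DownloadAll(blogs)) Console.WriteLine(feed);
    }
}
```

The trade-off is that the bag doesn’t preserve order, which is why the sketch sorts at the end. If order doesn’t matter to you, that last step can go.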

Exploring More Options

In the Parallel class, just like in PLINQ queries, there’s the possibility to specify the degree of parallelism as well as cancel the execution of a loop. We just need to use one of the overloads that uses a ParallelOptions type object.

    private void button11_Click(object sender, EventArgs e)
    {
        CancellationTokenSource cs = new CancellationTokenSource();
        var cores = Environment.ProcessorCount;
        clock.Restart();
        var options = new ParallelOptions()
        {
            // Never below 1: ParallelOptions rejects a value of 0 (e.g. on a single-core machine).
            MaxDegreeOfParallelism = Math.Max(1, cores / 2),
            CancellationToken = cs.Token
        };
        try
        {
            Parallel.For(1, 10, options, (i) =>
            {
                dowork_cancel(i, cs);
            });
        }
        catch (Exception ex)
        {
            // A cancelled loop surfaces here as an OperationCanceledException.
            MessageBox.Show(ex.Message);
        }
        clock.Stop();
        this.Text = clock.ElapsedMilliseconds.ToString("n2");
    }

    void dowork_cancel(int i, CancellationTokenSource cs)
    {
        Thread.Sleep(1000);
        if (i == 5) cs.Cancel();
    }

In the above case we cap the degree of parallelism at half the number of cores (with a minimum of 1) and prepare the loop for possible cancellation (something we simulate inside the dowork_cancel method when the counter reaches 5).
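If you want to try this outside a Windows Forms application, the following console sketch does the same thing and catches the specific exception the loop throws when its token is cancelled. CancellationSketch and RunUntilCancelled are hypothetical names, and the 50 ms sleep is only there to stand in for real work.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class CancellationSketch
{
    // Returns true when the loop was cancelled, false when it ran to completion.
    public static bool RunUntilCancelled(int cancelAt)
    {
        using (var cts = new CancellationTokenSource())
        {
            var options = new ParallelOptions
            {
                // Half the cores, but never less than 1.
                MaxDegreeOfParallelism = Math.Max(1, Environment.ProcessorCount / 2),
                CancellationToken = cts.Token
            };
            try
            {
                Parallel.For(1, 10, options, i =>
                {
                    Thread.Sleep(50); // stand-in for real work
                    if (i == cancelAt) cts.Cancel();
                });
                return false;
            }
            catch (OperationCanceledException)
            {
                // Parallel.For throws this once the token in its options is cancelled.
                return true;
            }
        }
    }

    public static void Main()
    {
        Console.WriteLine(RunUntilCancelled(5));   // True: iteration 5 cancels the loop
        Console.WriteLine(RunUntilCancelled(100)); // False: 100 is outside the range 1..9
    }
}
```

Iterations that are already running when Cancel is called are allowed to finish. The loop simply stops starting new ones and then throws.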

Coming up next…

In the next post we’ll see how to use the Task class to create, execute, chain, and manage tasks asynchronously.

Resistance is futile.