A Bit of History

Parallel programming isn’t anything new. It was already around back in my student days more than 20 years ago 😢 and nowadays, since the arrival of .NET Framework 4.0, it’s more alive than ever thanks to the Task Parallel Library (TPL).

However, saying that the TPL is only for performing asynchronous tasks is like saying a smartphone is only for making phone calls. It’s more, much more. And it’s precisely with this library that we’re going to dive into the fascinating world of parallel programming. This discipline has traditionally been associated with the highest technical profiles and reserved for special occasions.

But from now on, thanks to the TPL, it’s going to be accessible to all kinds of developers, and it’s going to become something very important - something that every good developer should add to their skill set. In fact, it’s going to be an essential part of the immediate future of application development at all levels.

But what is parallel programming, really? We can think of it as the possibility of dividing a long and heavy task into several shorter tasks and executing them at the same time, so that the whole job takes much less time than the original task would on its own.
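
As a minimal sketch of that idea with the TPL: the "long and heavy task" here is a made-up sum of squares, and the names `SplitWorkSketch`, `SumOfSquares` and `SumOfSquaresInParallel` are hypothetical, chosen only for illustration. The range is cut into chunks, each chunk runs as its own `Task`, and the partial results are added up at the end.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class SplitWorkSketch
{
    // Hypothetical stand-in for a long and heavy task: sum of i*i over [from, to).
    public static long SumOfSquares(int fromInclusive, int toExclusive)
    {
        long total = 0;
        for (int i = fromInclusive; i < toExclusive; i++)
        {
            total += (long)i * i;
        }
        return total;
    }

    // Splits [0, count) into `parts` contiguous chunks, starts one Task per chunk,
    // waits for all of them, and combines the partial sums.
    public static long SumOfSquaresInParallel(int count, int parts)
    {
        int chunkSize = (count + parts - 1) / parts; // round up so no item is lost

        Task<long>[] tasks = Enumerable.Range(0, parts)
            .Select(p =>
            {
                int from = Math.Min(p * chunkSize, count);
                int to = Math.Min(from + chunkSize, count);
                // Task.Factory.StartNew is available from .NET Framework 4.0 onwards.
                return Task.Factory.StartNew(() => SumOfSquares(from, to));
            })
            .ToArray();

        Task.WaitAll(tasks);
        return tasks.Sum(t => t.Result);
    }

    public static void Main()
    {
        long sequential = SumOfSquares(0, 1000000);
        long parallel = SumOfSquaresInParallel(1000000, Environment.ProcessorCount);

        // Both approaches must agree on the result; only the elapsed time differs.
        Console.WriteLine("Same result: " + (sequential == parallel));
    }
}
```

Whether the parallel version is actually faster depends on how many cores are available and on how heavy each chunk is. For very small workloads the cost of creating and coordinating the tasks can outweigh the gain.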

Encyclopedias and Monkeys

Let’s suppose we have to copy the 200 volumes of the great galactic encyclopedia of Terminus. Obviously, copying them one after another is not the same as hiring 200 trained mutant monkeys and sitting them at 200 desks with 200 pens to copy the 200 books. It’s clear that - if possible - the second option would be much faster.

But what happens if we only have 100 desks and pens? Well, the monkeys are going to have to queue up and wait their turn: when one monkey finishes or gets tired of writing, it has to give up its desk to the next monkey in line. That causes some queues along the way, and some anger among the monkeys, who are good workers but a bit particular.

All in all, unless a war breaks out, this will still be faster than the first option. It also gives us our first conclusion: the more resources (desks and pens) we have, the faster we’ll finish the task. And we’ll also have to worry less about managing the monkeys’ turns and waits, with everything that entails.
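
The monkeys-and-desks situation can be sketched with the TPL by treating each volume as a unit of work and each desk as a slot that can run one unit at a time. This is only an illustration under assumptions: `MonkeysAndDesksSketch` and `CopyEncyclopedia` are hypothetical names, and `Thread.Sleep` stands in for the actual copying. The real pieces are `Parallel.ForEach` and `ParallelOptions.MaxDegreeOfParallelism`, which caps how many iterations run at once.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class MonkeysAndDesksSketch
{
    // Copies `volumes` books with at most `desks` copies in progress at any moment.
    // Returns the highest number of desks that were ever busy at the same time.
    public static int CopyEncyclopedia(int volumes, int desks)
    {
        int busyDesks = 0;
        int peakBusyDesks = 0;

        // The desks are the limited resource: no more than `desks` iterations run at once.
        var options = new ParallelOptions { MaxDegreeOfParallelism = desks };

        Parallel.ForEach(Enumerable.Range(1, volumes), options, volume =>
        {
            int nowBusy = Interlocked.Increment(ref busyDesks);

            // Atomically record a new peak if this is the busiest moment seen so far.
            int seenPeak;
            do
            {
                seenPeak = peakBusyDesks;
                if (nowBusy <= seenPeak)
                {
                    break;
                }
            } while (Interlocked.CompareExchange(ref peakBusyDesks, nowBusy, seenPeak) != seenPeak);

            Thread.Sleep(5); // hypothetical time a monkey needs to copy one volume

            Interlocked.Decrement(ref busyDesks);
        });

        return peakBusyDesks;
    }

    public static void Main()
    {
        int peak = CopyEncyclopedia(200, 100);
        Console.WriteLine("Busiest moment: " + peak + " desks in use");
    }
}
```

The reported peak never exceeds the number of desks, but it may well be lower: the scheduler decides how many worker threads to use, so the exact figure varies from run to run and from machine to machine.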

As we’ll see later, when working with monkeys or with threads, synchronization time is often crucial, and it can make the difference between the success and failure of our application.

Dead Laws and Quantum Physics

However, until now we’ve been doing pretty well with traditional programming, so why does it seem like learning this new paradigm is now a matter of life and death? I mean, usually until now there weren’t too many occasions where an application had to resort to asynchrony or parallelism (which as we’ll see later isn’t exactly the same thing). Why now?

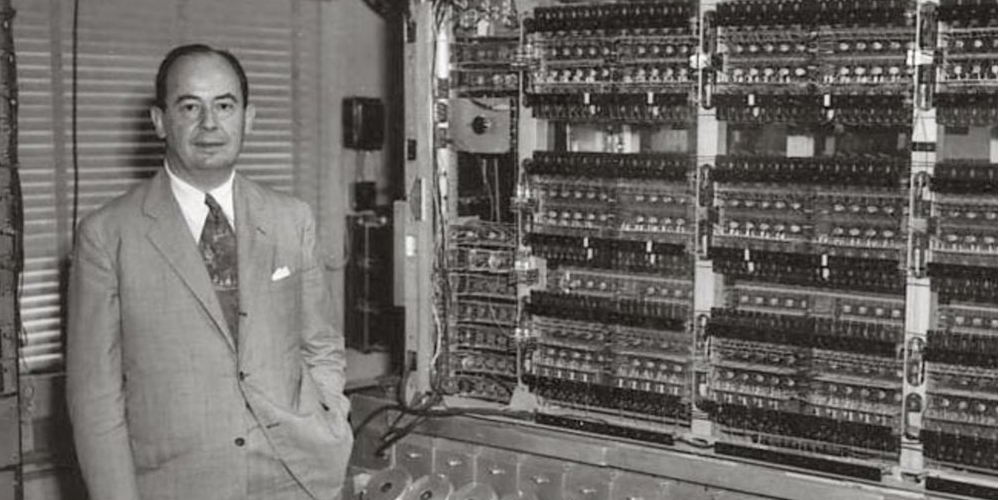

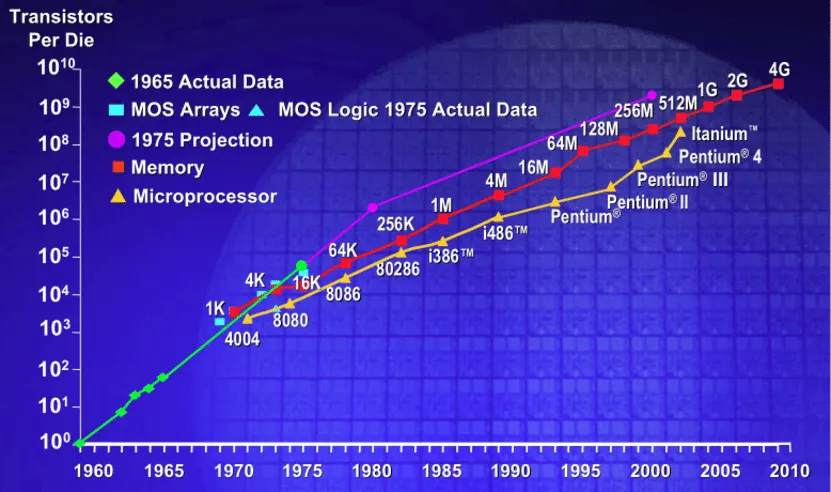

The answer is simple: it seems the good life is ending. If there’s been one constant in IT over the last 45 years, it’s the one described by Moore’s law. In 1965, Gordon Moore, who would go on to co-found Intel, predicted that the number of components in an integrated circuit would double every year, a pace he revised in 1975 to every two years (the often-quoted 18-month figure is a later variant). The prediction has held remarkably well until today, although in recent years certain limits are being reached that prevent this law from continuing to be fulfilled.
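
As a rough back-of-the-envelope check of what doubling every two years adds up to over those 45 years (an idealized model, not a measured figure), let $N_0$ be the initial component count and $t$ the elapsed time in years:

$$
N(t) = N_0 \cdot 2^{t/2}, \qquad N(45) = N_0 \cdot 2^{22.5} \approx 5.9 \times 10^{6} \, N_0
$$

In other words, roughly a six-million-fold increase in components per chip.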

To simplify a bit, we could say there are a couple of problems: the scaling of microprocessor frequency and the heat that microprocessors generate. The first refers to the difficulty of continuing to increase the speed of microprocessors, mainly because the technology used to manufacture them is currently (as of 2011, when this was written) close to 32 nanometers, and the physical limit before matter starts to behave differently is calculated to be between 22 and 18 nanometers. This limit is expected to be reached in just two or three years, around 2014. Just around the corner.

Once that level of miniaturization is reached, in the words of scientist Stephen Hawking: “Matter exhibits quantum effects that would require different technology to continue performing calculations at that level.”

The second problem is linked to the first, and it’s that in recent years the heat generated by microprocessors has been increasing exponentially, and in terms of power density it’s already equal to the heat generated by a rocket nozzle. The worst part is that increasing frequency by only 5 to 10 percent each year has a cost of almost doubling the temperature.

By this I don’t mean that faster computers can’t be manufactured in the future. I mean that if these predictions are accurate, faster microprocessors can’t be manufactured with current technology. Maybe it will be possible if we discover how to build computers that use optical technology, nano-engineering to create transistors based on nanotubes that take advantage of the so-called tunnel effect, or any other concept yet to be discovered. But for now we can’t count on it.

The 2011 Predictions, Updated for 2025

1. Predictions about scaling limits were both right and wrong.

- Back then, people saw ~22 nm as a hard wall because below that, quantum effects (like tunneling) were expected to make transistors unreliable.

- In reality, chipmakers found clever engineering solutions, such as new transistor architectures (FinFET in 2011–2012, and now gate-all-around nanosheets), that let scaling continue below 22 nm.

2. Frequency scaling did stall.

- The text correctly identifies the “frequency wall.” Around 2004–2005, CPUs stopped ramping up in GHz because of heat and power consumption. Even in 2025, we’re still mostly in the 3–5 GHz range for mainstream CPUs.

- Progress shifted to parallelism (more cores) and architectural efficiency instead of raw clock speeds.

3. Heat is still a defining challenge.

- The power density problem hasn’t gone away. Modern CPUs and GPUs use sophisticated cooling, power gating, and dynamic frequency scaling to keep thermals in check.

4. The roadmap extended much further than expected.

- By 2025, we’ve gone well past the 22 nm “limit.” Commercial chips are at 5 nm and 3 nm, with 2 nm in pilot production (keeping in mind that these node names are now marketing labels rather than literal feature sizes). So the “end of Moore’s law” has been more of a gradual slowdown than a hard stop.

5. The shift in mindset is key.

- In 2011, the discussion was mostly about shrinking transistors.

- Today, innovation also comes from chiplet architectures, 3D stacking, advanced packaging, AI-specialized accelerators, and materials research. In other words, the industry adapted by broadening the definition of progress.

👉 In summary: in 2011, people knew limits were coming, but underestimated how much engineering ingenuity would stretch them out.

Deus Ex Machina

If humans are characterized by anything, it’s their great ability to solve problems (leaving aside their not insignificant ability to cause them), so one solution to this problem has already been in development and production for a few years now.

In fact, nowadays it has become almost commonplace: manufacturing processors with multiple cores, which share the work - like the monkeys - and so achieve increased speed. Not because each core is getting faster and faster, but because there are more and more resources working at the same time.

Something similar - with all due differences - happens in the human brain. Compared to a computer it is considerably slower, but thanks to the trillions of connections between its neurons, its capacity for parallel processing has no rival in anything known in nature or created by man.

Coming up next…

In the next post we’ll clarify some basic but necessary concepts when developing applications that make use of asynchronous programming.

Resistance is futile.