Operating System Elements

When we talk about parallel programming, it helps to have a clear picture of a few operating-system concepts. In this section we’ll try to clarify these terms, since we’ll be using them frequently later on.

Processes

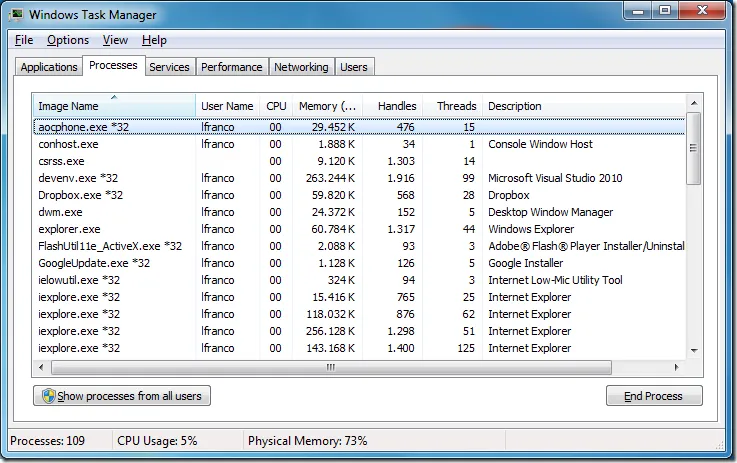

Every application running on the operating system exists within the context of a process, although not all processes correspond to visible applications. Just open Task Manager and you’ll see that the list of processes is much longer than the list of applications. That’s because processes can also belong to services or background applications, and some applications are designed to create multiple processes (hello, [insert your favorite browser here] :smile:).

A process provides the resources needed to execute a program: a virtual address space, executable code, a security context, a unique process identifier, environment variables, and at least one thread of execution.

Each process starts with a single thread, usually called the main thread, but it can create additional threads to handle different tasks.
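To make the process/thread relationship concrete, here is a minimal sketch in Java, used only as a stand-in (in .NET the rough equivalents would be `Process.GetCurrentProcess().Id` and `System.Threading.Thread`). It prints the current process identifier and then has the main thread start a few extra worker threads. The class and method names (`ProcessAndThreads`, `runWorkers`) and the `worker-N` thread names are hypothetical, chosen for illustration.

```java
import java.util.ArrayList;
import java.util.List;

class ProcessAndThreads {
    // OS-assigned identifier of the current process (ProcessHandle requires Java 9+).
    static long currentPid() {
        return ProcessHandle.current().pid();
    }

    // Called from the main thread: starts `count` extra threads and waits for all of them.
    // Each worker writes its own name into its own array slot; returns the names in order.
    static List<String> runWorkers(int count) {
        String[] names = new String[count];
        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            final int index = i;
            Thread t = new Thread(() -> names[index] = Thread.currentThread().getName(), "worker-" + i);
            workers.add(t);
            t.start();
        }
        for (Thread t : workers) {
            try {
                t.join(); // join() also makes each worker's write visible to this thread
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting for workers", e);
            }
        }
        return List.of(names);
    }

    public static void main(String[] args) {
        System.out.println("Process id:  " + currentPid());
        System.out.println("Main thread: " + Thread.currentThread().getName());
        System.out.println("Workers:     " + runWorkers(3));
    }
}
```

All the workers run inside the same process, so they share its identifier; only the thread names differ.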

Communication between processes is complex and expensive in terms of performance: because each process has its own isolated address space, calls must go through inter-process communication mechanisms such as pipes, sockets, or RPC (Remote Procedure Call).

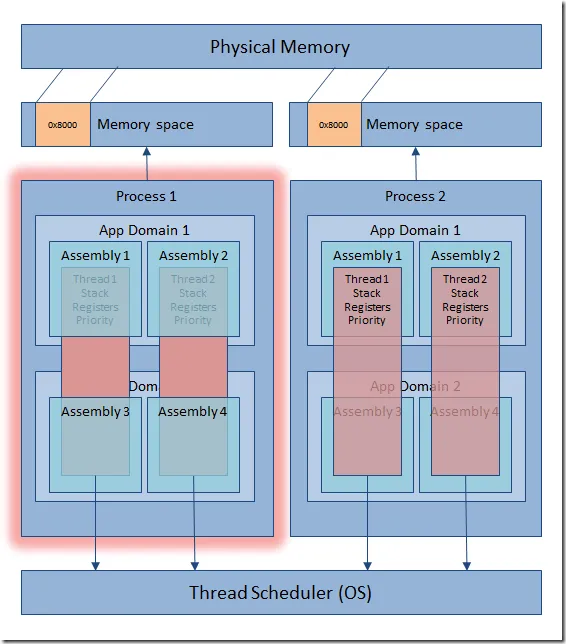

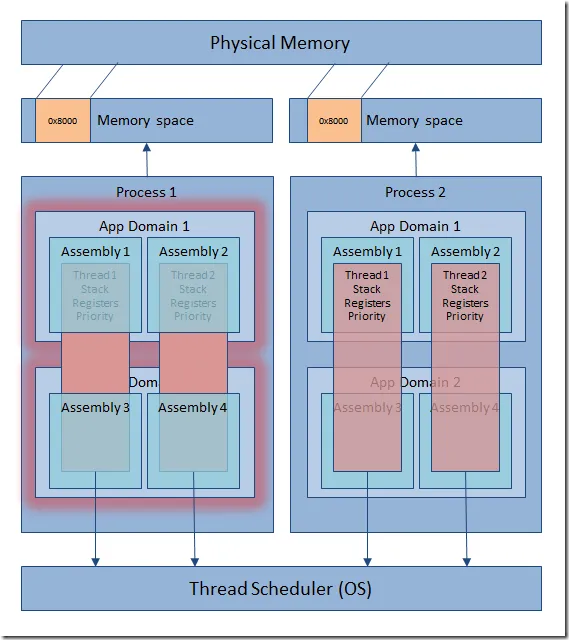

.NET Application Domains (AppDomains)

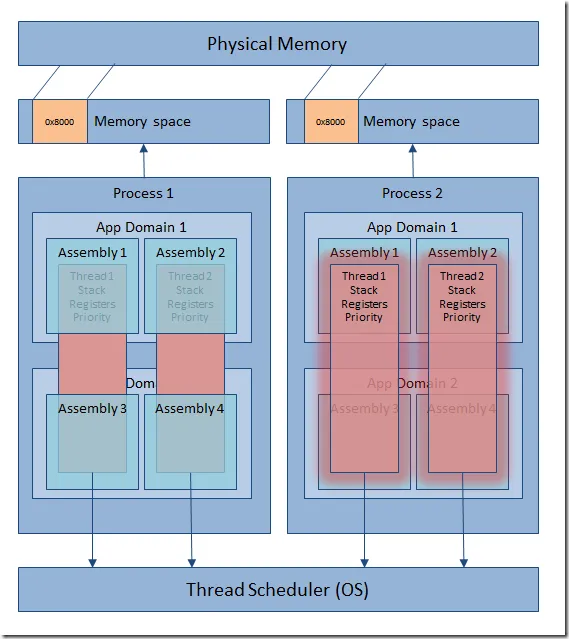

Because .NET is a platform that executes managed code, the processes created to run .NET applications are a bit different. When .NET was designed, one of the main goals was to improve on the handling of traditional (unmanaged) processes, and that is why the concept of the application domain was created. An application domain can be described as a logical process running inside the operating system process.

The big difference is that a single process can host several application domains, each with its own assemblies loaded, and calls between application domains (and the assemblies they contain) are much faster than calls between processes. If two application domains need the same assembly, it is loaded into each domain separately.

In this way, application domains give you isolation between pieces of code similar to what separate processes provide, without paying the overhead of inter-process calls.

Threads

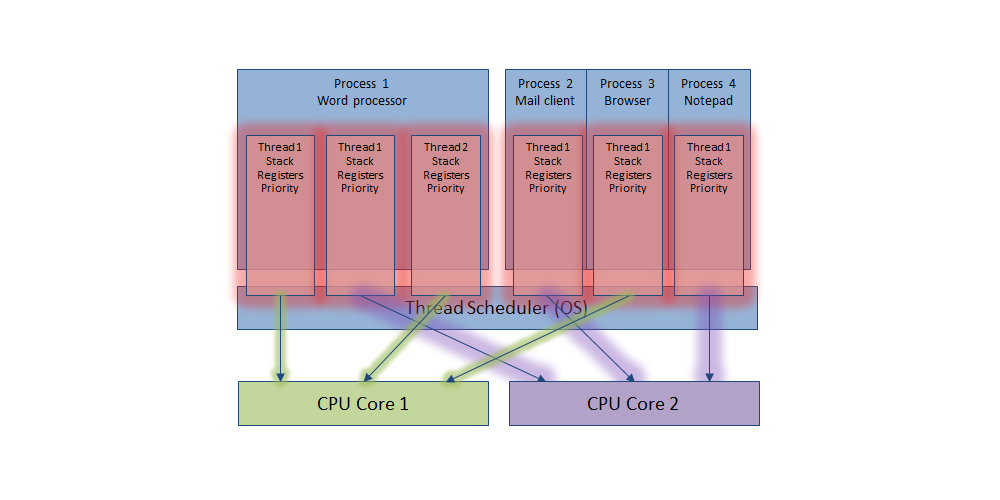

A thread is the entity within a process that actually executes code. All the threads of a process share its virtual address space and system resources, while each thread maintains its own exception handlers, scheduling priority, thread-local storage, and a unique thread identifier.
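The split between shared and per-thread state can be sketched as follows, again in Java as a stand-in for .NET (where `ThreadLocal<T>` plays the same role). The names `SharedVsLocal` and `run` are hypothetical. Every thread increments one counter on the shared heap and one thread-local counter: the shared counter accumulates every increment, while the calling thread's own thread-local copy is never touched.

```java
import java.util.concurrent.atomic.AtomicInteger;

class SharedVsLocal {
    // Lives on the shared heap: every thread in the process updates the same object.
    static final AtomicInteger shared = new AtomicInteger();

    // Thread-local storage: each thread lazily gets its own copy, initialised to 0.
    static final ThreadLocal<Integer> local = ThreadLocal.withInitial(() -> 0);

    // Starts `threads` threads; each increments both counters `times` times.
    // Returns {shared total, the calling thread's own thread-local value}.
    static int[] run(int threads, int times) {
        shared.set(0);
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < times; j++) {
                    shared.incrementAndGet();   // seen by every thread
                    local.set(local.get() + 1); // seen only by this worker
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        // The caller never modified its own copy of `local`, so it still reads 0.
        return new int[] { shared.get(), local.get() };
    }

    public static void main(String[] args) {
        int[] result = run(4, 1_000);
        System.out.println("shared = " + result[0] + ", caller's thread-local = " + result[1]);
    }
}
```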

Threads are independent of application domains, so we can think of them as cross-cutting elements that can move from one domain to another over time. There is no fixed correspondence between the number of threads and the number of application domains.

Every process is created with one thread, the main thread, although .NET applications start with at least two, because the runtime needs an additional thread for garbage-collection work. Beyond that, a process can create a very large number of threads, but the operating system always retains the power to lower their priority or even suspend them.

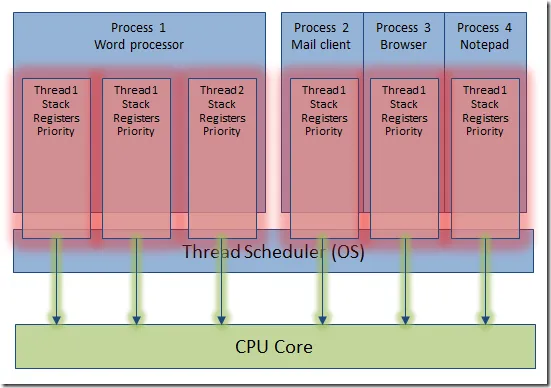

Context switches between threads of the same process are much cheaper than switches between processes, because those threads share the same address space. In operating systems that use preemptive multitasking (the vast majority nowadays), the operating system gives each thread of each loaded process a small slice of time in which to execute part of its code, which creates the impression that multiple applications are running at the same time.

The advantage of this kind of multitasking over its predecessor, cooperative multitasking, is that if a process stops responding, the system doesn’t collapse and keeps responding without being affected by the crash. In practice, this has meant the near disappearance of the so-called BSOD (Blue Screen Of Death) caused by hung applications.

Multithreading

The ability of a process to create several threads that execute concurrently is what we call multithreading, and it is what has allowed personal computers to simulate multitasking for the last decade and a half. Although there is physically only one processor, the operating system gives each thread of each loaded process a slice of time, and by repeating this over and over very quickly it creates the impression that all applications are running at the same time. Nothing could be further from the truth, at least until recently.

Parallelism

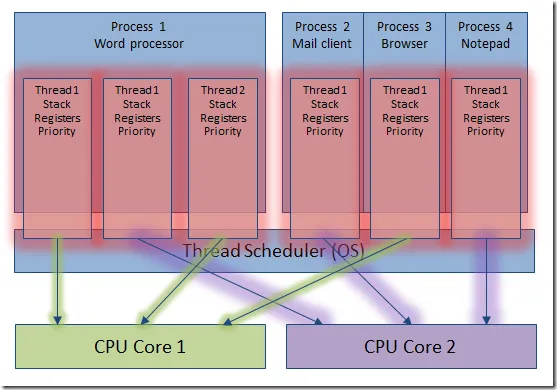

With the arrival of the first multi-core microprocessors, it finally became possible to execute code in parallel and achieve the much-desired true multitasking, since different threads can run on different cores at the same time. The more cores, the more threads can run simultaneously, and therefore the more code can execute at once.
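A minimal sketch of splitting work across cores, assuming Java as a stand-in for .NET (the TPL would normally do this partitioning for you). The hypothetical `ParallelSum.sum` divides an array into one contiguous chunk per thread; each thread writes only its own slot of `partial`, so no locking is needed until the results are combined after `join()`. Whether the threads actually land on different cores is up to the operating system's scheduler.

```java
class ParallelSum {
    // Sums `values` using `workers` threads, each handling one contiguous chunk.
    static long sum(long[] values, int workers) {
        if (workers < 1) {
            throw new IllegalArgumentException("workers must be >= 1");
        }
        long[] partial = new long[workers];                  // one result slot per thread
        Thread[] threads = new Thread[workers];
        int chunk = (values.length + workers - 1) / workers; // ceiling division
        for (int w = 0; w < workers; w++) {
            final int id = w;
            final int from = Math.min(values.length, w * chunk);
            final int to = Math.min(values.length, from + chunk);
            threads[w] = new Thread(() -> {
                long s = 0;
                for (int i = from; i < to; i++) {
                    s += values[i];
                }
                partial[id] = s;                             // no other thread writes this slot
            });
            threads[w].start();
        }
        long total = 0;
        for (int w = 0; w < workers; w++) {
            try {
                threads[w].join();                           // wait, and see the worker's result
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
            total += partial[w];
        }
        return total;
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        long[] data = new long[1_000_000];
        for (int i = 0; i < data.length; i++) {
            data[i] = i;
        }
        System.out.println(cores + " worker threads, sum = " + sum(data, cores));
    }
}
```

`Runtime.getRuntime().availableProcessors()` plays the role of .NET's `Environment.ProcessorCount` here; one worker per core is a common starting point, not a rule.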

It’s been barely four or five years since the first dual-core processors appeared, quad-cores followed shortly after, and nowadays it’s quite common to see workstations with 8 and even 16 cores. As for the future, nobody knows how fast this technology will evolve, but the folks at Intel leaked images of a Windows Server machine with 256 cores more than two years ago. Even the low-power ARM processors used in phones and tablets are starting to ship in dual- and quad-core models.

Going back to the monkey example, it’s very tempting to think that by harnessing all the power of the new cores we can get spectacular performance gains and write the 200 volumes in the time it takes to write just one.

Obviously this is an exaggeration, since there will always be hard work involved in synchronizing the different monkeys… sorry, threads. Still, the gain can be spectacular, reaching speedups of x5 or x6 on an 8-core machine for work that parallelizes well, which is not to be underestimated in certain processes. So, given the number of cores we’re heading towards, in my opinion it becomes imperative to know, if not master, the TPL (Task Parallel Library).
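One standard way to see why 8 cores yield x5 or x6 rather than x8 is Amdahl's law, which the original post does not mention: if a fraction p of the work can run in parallel on N cores and the rest stays serial, the theoretical maximum speedup is S(N) below. The value p = 0.95 is an assumption chosen purely for illustration.

$$
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}}, \qquad
S(8)\Big|_{p = 0.95} = \frac{1}{0.05 + 0.11875} = \frac{1}{0.16875} \approx 5.93
$$

Under that assumption, the 5% of work that cannot be parallelized (coordinating the monkeys, for instance) is enough to cap 8 cores at roughly x5.9.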

Before We Finish, Some Advice

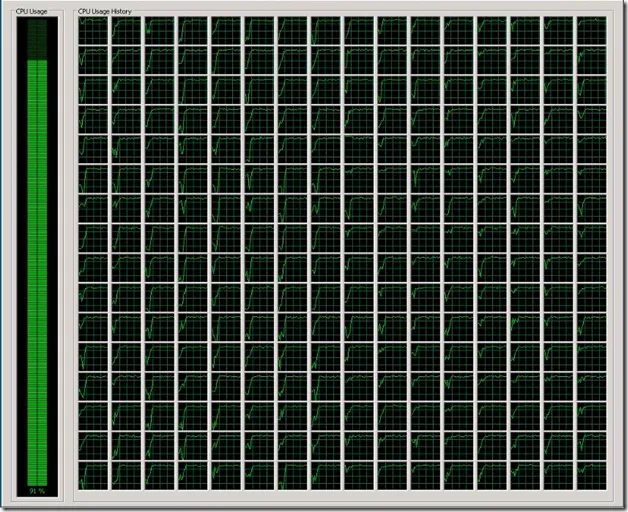

As we’ve seen, real parallelism is only possible on a machine with multiple cores. On a single-core machine the code still works without errors, but it only uses that one core. A fairly common mistake among developers is to develop inside virtual machines and forget that many of them don’t let you assign more than one core to the guest. So it’s quite likely that at some point we’ll find ourselves grumbling because well-written code gets no performance gain when we run it inside a virtual machine :angry:
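A quick sanity check before blaming the code is to ask the runtime how many processors it can see. This is a hypothetical helper (`CoreCheck`) written in Java as a stand-in; in .NET, `Environment.ProcessorCount` gives the same figure. Inside a single-core virtual machine it would report 1.

```java
class CoreCheck {
    // Processors visible to this runtime; a single-core VM (or a CPU-limited container) may report fewer.
    static int cores() {
        return Runtime.getRuntime().availableProcessors();
    }

    // Turns the core count into a short, human-readable hint.
    static String advice(int cores) {
        if (cores > 1) {
            return cores + " cores available: threads can really run in parallel.";
        }
        return "Only 1 core available: parallel code will run correctly, but without speedup.";
    }

    public static void main(String[] args) {
        System.out.println(advice(cores()));
    }
}
```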

Coming up next…

In the next post we’ll see how Parallel LINQ (PLINQ) extends LINQ so that our queries over enumerable collections run in parallel, with almost no changes to existing code.

Resistance is futile.